Integrating AI into software development and testing is now standard practice, offering significant gains in speed, efficiency, and quality. For technology leaders, the challenge is not whether to use AI, but how to control and manage its adoption to ensure responsible, effective, and secure outcomes.

In this article, we address the key strategies and best practices that enable tech leaders to control the process and prevent risks associated with Shadow AI.

A Common Scenario That Creates Shadow AI

You start by getting a team license for, say, OpenAI. Soon after, your engineers start using the API key in their IDEs. Initially, that seems like a good way to manage costs and keep control of your data.

However, you soon realize that engineers are also using their personal keys on other AI platforms: ones they prefer, are just experimenting with, or that simply have features OpenAI lacks.

Now, you don’t necessarily have to discourage this, but it does raise concerns about control and privacy, doesn’t it?

So, how do you, as a technology leader, manage this? What are the pros and cons? Are there any potential pitfalls and traps that you must address immediately?

(FYI, this was a genuine question asked by a member of our community, a Group CTO of a major international corporation who recently faced this challenge. When we took a deeper look, we found it to be a recurring scenario that many tech leaders struggle with.)

General Mitigation and Control Strategies

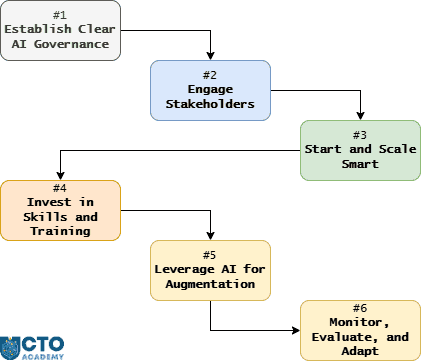

Generally, there are six strategies you should implement at the very beginning of the process:

- Establish Clear AI Governance (i.e., policies, ethical standards, etc.).

- Engage Stakeholders by forming AI committees (for mid-sized to large organizations) and maintaining transparent communication.

- Start and Scale Smart (Responsibly):

  - STEP 1: Identify repetitive, high-friction tasks in the software development lifecycle (SDLC) that can benefit most from AI.

  - STEP 2: Begin with small, well-defined pilots.

  - STEP 3: Gather feedback.

  - STEP 4: Refine your approach before scaling to broader use cases.

- Invest in Skills and Training by upskilling both teams and leaders.

- Leverage AI for Augmentation, not Replacement, by enhancing human roles and maintaining human oversight. In other words, use AI to automate routine tasks like test case generation, bug triage, and performance monitoring, so your teams can focus on creative problem-solving, strategy, and innovation. At the same time, make team members accountable for critical decisions, test strategy, and interpreting AI-generated insights, especially for nuanced or high-stakes scenarios.

- Monitor, Evaluate, and Adapt.

TIP: Where possible, test AI deployments in sandboxes so you can identify and mitigate risks in a controlled environment before full-scale rollout.

The scenario we mentioned earlier mirrors a common challenge faced by technology leaders as AI tools proliferate:

How do you balance innovation and autonomy with the need for control, privacy, and cost management?

Here’s how others in the industry are addressing similar issues.

How Tech Leaders Are Managing Multi-Platform AI Usage

Centralized API Key Management

Organizations increasingly opt for centralized management of API keys, using platforms or internal proxies to control access. For example, some teams implement a proxy service that authenticates developers through an identity provider (IdP), hiding the actual API keys and consolidating usage under organizational control.
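To make the proxy idea more concrete, here is a minimal sketch of the key-brokering step in Python. It assumes the IdP has already authenticated the developer and shows only how a proxy might attach the organizational key and count usage. The names `KeyBroker`, `PROVIDER_KEYS`, and `USER_ENTITLEMENTS` are hypothetical, and in practice the keys would come from a secrets manager rather than from code.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical store of organizational keys; a real proxy would read
# these from a secrets manager, never from source code.
PROVIDER_KEYS = {"openai": "sk-org-placeholder"}

# Hypothetical mapping of IdP-verified users to providers they may call.
USER_ENTITLEMENTS = {"alice@example.com": {"openai"}}


@dataclass
class KeyBroker:
    # (user, provider) -> number of brokered requests, for cost tracking
    usage: dict = field(default_factory=lambda: defaultdict(int))

    def build_upstream_headers(self, user: str, provider: str) -> dict:
        """Return headers for the upstream call, or raise if not entitled.

        `user` is assumed to be an identity already verified by the IdP.
        """
        if provider not in USER_ENTITLEMENTS.get(user, set()):
            raise PermissionError(f"{user} is not entitled to use {provider}")
        self.usage[(user, provider)] += 1
        # The developer never sees this key; only the proxy holds it.
        return {"Authorization": f"Bearer {PROVIDER_KEYS[provider]}"}
```

Because every request passes through one place, the same component can later enforce quotas or switch providers without touching developer machines.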

Tools like Eden AI offer multi-API key management, letting teams organize, monitor, and switch between keys for different projects or providers from a single interface. This approach enables granular usage tracking, cost optimization, and better security.

Policy and Governance

You need clear internal policies on which AI platforms and keys are permitted in development and testing. This includes specifying approved providers, outlining data handling requirements, and establishing processes for requesting access to new tools.

Some organizations allow experimentation with new platforms but require engineers to register external API usage with IT or security, ensuring transparency and risk assessment.
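One way to keep such a policy enforceable is to express it as data that tooling can check. The sketch below is an illustration only: the provider names, the data classes (`public`, `internal`), and the `check_usage` function are all assumptions, not taken from any specific organization.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderPolicy:
    name: str
    approved: bool           # on the organization's approved list
    allowed_data: frozenset  # data classes that may be sent to it


# Hypothetical policy table; real entries would come from your governance process.
POLICIES = {
    "openai": ProviderPolicy("openai", True, frozenset({"public", "internal"})),
    "experimental-llm": ProviderPolicy("experimental-llm", False, frozenset({"public"})),
}


def check_usage(provider: str, data_class: str, registered: bool = False) -> str:
    """Decide whether a use of `provider` with `data_class` is permitted.

    `registered` means the engineer has registered this external usage
    with IT or security, as described above.
    """
    policy = POLICIES.get(provider)
    if policy is None:
        return "deny: unknown provider, request access first"
    if data_class not in policy.allowed_data:
        return "deny: data class not permitted for this provider"
    if policy.approved or registered:
        return "allow"
    return "deny: register this usage with IT or security"
```

For example, `check_usage("experimental-llm", "public", registered=True)` returns `"allow"`, while the same call without registration is denied.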

Some of our members also noted more rigorous practices. One highly security-sensitive organization monitors prompts sent to ChatGPT and flags any potentially sensitive personal data leaving its network. Even so, it still encourages the use of dev tools.
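As a rough illustration of what flagging prompts can look like, the sketch below scans outgoing text against a few regular expressions. The patterns and the `flag_prompt` name are assumptions chosen for the example. They will miss many cases and produce false positives, so a real deployment would more likely rely on a dedicated data loss prevention tool.

```python
import re

# Illustrative patterns only; not a complete definition of sensitive data.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{20,}\b"),
}


def flag_prompt(prompt: str) -> list[str]:
    """Return the sorted names of all patterns found in the prompt."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(prompt))
```

A monitoring proxy could log or block any prompt for which `flag_prompt` returns a non-empty list, while letting ordinary coding questions through.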

Another example is a company that deployed its own internal chatbot (leveraging AWS Bedrock) while banning egress of any IP or data outside of its network. The ban extended to tools such as Cursor and Copilot, since source code would inevitably leave the proprietary network.

Some organizations use customized mobile device management (MDM) policies to control which apps are installed on company-owned mobile devices. However, this implies that external (personal) devices are strictly prohibited. Fortunately, there are now IDEs with enterprise features (JetBrains, NXP eIQ® AI) that allow at least some form of BYOD.

But overall, as one of our members concluded, increased organizational control leads to a more expensive and less convenient system. That tradeoff must be considered before laying down the general policy.

Monitoring and Auditing

Regular audits of API usage help identify shadow IT (unapproved tools or keys) and potential data privacy risks. This is especially important as personal API keys can bypass organizational controls, leading to fragmented data governance.
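A simple audit can start from network egress logs. The sketch below assumes a log where each line is `<user> <host>` and counts calls to known AI endpoints that are not on the approved list. The log format, the host lists, and the `find_unapproved` name are assumptions for illustration, and the host list is far from exhaustive.

```python
from collections import Counter

# Hypothetical lists; maintain these as part of your governance policy.
APPROVED_HOSTS = {"api.openai.com"}
KNOWN_AI_HOSTS = {"api.openai.com", "api.anthropic.com", "api.mistral.ai"}


def find_unapproved(log_lines):
    """Count requests per (user, host) to AI hosts that are not approved.

    Each log line is assumed to be '<user> <host>'; malformed lines are skipped.
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue
        user, host = parts
        if host in KNOWN_AI_HOSTS and host not in APPROVED_HOSTS:
            hits[(user, host)] += 1
    return hits
```

The result is a starting point for a conversation with the engineer rather than proof of a violation, since the traffic may belong to a registered experiment.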

Education and Communication

Tech leaders must educate their teams about the privacy, security, and compliance implications of using personal or external AI tools while encouraging responsible experimentation within defined boundaries.

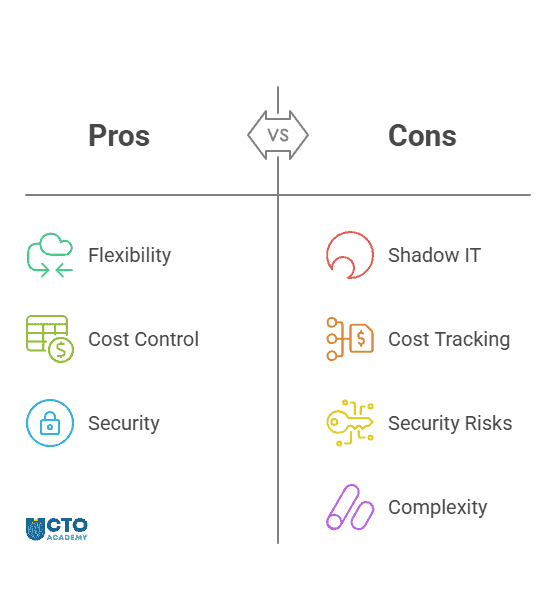

Pros

- Flexibility and Innovation

- Cost Control (if centralized)

- Security and Privacy (if controlled)

Cons and Pitfalls

- Shadow IT Risks

- Fragmented Cost Tracking

- Security Vulnerabilities (API keys as access points)

- Operational Complexity and Overhead from managing multiple providers and keys (especially when scaling)

Key Recommendations

- Implement a centralized API key management solution (internal proxy or third-party tool) to consolidate access, monitor usage, and control costs.

- Establish clear policies on approved AI platforms and key usage, balancing innovation with security and compliance.

- Educate and engage your team on the risks and responsibilities of using AI tools, especially regarding data privacy and organizational policies.

- Regularly audit and review API usage to detect and address shadow IT, ensuring all AI activity aligns with company standards and legal requirements.

In summary, by combining centralized controls with clear policies and ongoing education, you give your teams the freedom to innovate while maintaining the oversight necessary to protect your organization’s data, privacy, and budget.